Porting a unikernel to Xen: Intro

These last few months I have been very busy writing my bachelor's thesis with the catchy title "Implementing support for the Xen hypervisor in the Unikernel HermitCore". This turned out to be a very interesting project, and I have decided to turn it into a series of blog posts. I will try to explain the conceptual changes that have to be made to an operating system so that it can run as the different types of guests Xen supports, and show details of the implementation. So without further ado, let’s get started :-)

Intro

My thesis was dedicated to implementing support for the Xen hypervisor in the unikernel HermitCore. HermitCore is a minimalistic operating system, a so-called unikernel, developed at the Institute for Automation of Complex Power Systems (ACS) of RWTH Aachen University. It is specifically designed for high performance computing (HPC) and cloud applications. Xen is a hypervisor that allows multiple virtual machines to run on a single physical computer. It was originally developed as a research project at the University of Cambridge in 2003 and is today one of the most widely used hypervisors in the public cloud. Originally, HermitCore only supported execution as a virtual machine in KVM, the hypervisor integrated into Linux. Because Xen is so widespread in the cloud and HermitCore is particularly well suited to cloud applications, adding Xen support was chosen as the subject of my thesis. The focus lay mainly on developing a working prototype of HermitCore that can be executed as a virtual machine in Xen. The implementation is partly based on the reference implementation Mini-OS, a minimalistic operating system designed specifically to demonstrate the concepts and features an operating system needs in order to work as a Xen guest.

This post first explains some concepts and basics that help in understanding the implementation, among them the conceptual changes that have to be made to an operating system and the different types of virtual machines that can be executed in Xen. Future posts will then deal in detail with the actual changes to the HermitCore source code and compare the performance of the new implementation with that of the original. The source code discussed in these posts was developed specifically for HermitCore, but it can certainly serve as a template for other operating systems that are to be ported to Xen.

Xen

Xen is a hypervisor, i.e. software that allows several virtual machines to run on one physical computer. It was originally developed at the University of Cambridge and is developed further today under the Linux Foundation with the support of Intel. It is a type 1 hypervisor, also called Virtual Machine Monitor (VMM), that runs directly on the hardware. Xen can start several operating systems in virtual machines, the so-called domains. Neither the hypervisor nor the other domains are “visible” to these operating systems. In principle, this is comparable to virtual memory and processes: with virtual memory, every process can use the memory as if it were the only process run by the operating system. More precisely, the hypervisor assigns parts of the physical main memory to each virtual system. To the virtual system, these parts appear as a contiguous address space, just as physical memory appears to a non-virtualized system, and the virtual system can use it accordingly and exclusively. The first domain started by Xen has a special role. This domain is privileged and is used to interact with the hypervisor itself. This privileged domain, called dom0, can start, stop and manage other domains. The management functionality must be integrated into the operating system running in dom0.
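The "contiguous address space" a domain sees can be illustrated with a small translation table. In Xen terminology the guest works with pseudo-physical frame numbers that are backed by arbitrary machine frames; a physical-to-machine (p2m) table and its inverse (m2p) translate between the two. The sketch below is a toy model under that assumption: the table sizes, the frame numbers and the function names are hypothetical and do not reflect Xen's actual data layout.

```c
/* Toy model of pseudo-physical <-> machine translation; all names are hypothetical. */
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_OFFSET_MASK ((UINT64_C(1) << PAGE_SHIFT) - 1)
#define GUEST_PAGES 4     /* hypothetical: a guest with 4 pages */
#define MACHINE_PAGES 16  /* hypothetical: a host with 16 frames */
#define INVALID_FRAME UINT64_MAX

/* p2m: guest pseudo-physical frame number -> machine frame number.
 * Contiguous guest frames 0..3 are backed by scattered machine frames. */
static uint64_t p2m[GUEST_PAGES] = { 7, 2, 11, 5 };

/* m2p: machine frame number -> guest frame number (inverse of p2m). */
static uint64_t m2p[MACHINE_PAGES];

static void build_m2p(void)
{
    for (uint64_t m = 0; m < MACHINE_PAGES; m++)
        m2p[m] = INVALID_FRAME;
    for (uint64_t p = 0; p < GUEST_PAGES; p++)
        m2p[p2m[p]] = p;
}

/* Guest "physical" address -> machine address (page offset is preserved). */
static uint64_t phys_to_machine(uint64_t paddr)
{
    uint64_t pfn = paddr >> PAGE_SHIFT;
    assert(pfn < GUEST_PAGES);
    return (p2m[pfn] << PAGE_SHIFT) | (paddr & PAGE_OFFSET_MASK);
}

/* Machine address -> guest "physical" address. */
static uint64_t machine_to_phys(uint64_t maddr)
{
    uint64_t mfn = maddr >> PAGE_SHIFT;
    assert(mfn < MACHINE_PAGES && m2p[mfn] != INVALID_FRAME);
    return (m2p[mfn] << PAGE_SHIFT) | (maddr & PAGE_OFFSET_MASK);
}
```

After `build_m2p()`, `phys_to_machine(0x1234)` yields `0x2234`, because guest frame 1 is backed by machine frame 2. In a real PV guest the initial p2m list is handed over by Xen at boot and the m2p table is maintained by the hypervisor; here both are plain static arrays.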

With a traditional hypervisor, the virtual hardware provided to the guest operating system is identical to that of a real machine. This type of virtualization, also called full virtualization, has the advantage that unmodified operating systems can be run on the hypervisor. However, it also has disadvantages, because the original x86 architecture was not designed to be fully virtualizable. Among other things, it is very difficult to emulate a Memory Management Unit (MMU). The associated problems can be solved, but the solutions often come at the expense of reduced performance and increased complexity.

Xen originally avoided the problems of full virtualization by providing the guest with hardware that is very similar, but not identical, to a real machine. This approach is called paravirtualization. It promises increased performance, but requires changes to the guest operating system. Full virtualization is nevertheless supported, because more recent x86 CPUs have made it much simpler: they include instruction set extensions targeted specifically at virtualization, which allow virtual machines to manage their own page tables without the hypervisor's involvement, so an MMU no longer has to be emulated.

Paravirtualization

The changes that must be made to a guest operating system so that it can run as a paravirtualized VM in Xen can be roughly divided into three areas: memory management, CPU usage and device I/O.

Memory Management

Memory management is the most difficult part of paravirtualization to implement. Unlike some other processor architectures, x86 does not allow the translation lookaside buffer (TLB) to be managed by software. When an entry is missing from the TLB, the processor fills it automatically by walking the page table hierarchy until it finds the translation. Because the x86 TLB is not tagged, a switch to a different address space generally requires a complete TLB flush. This can noticeably reduce performance, since after each flush every translation has to be looked up in the page tables again.

To minimize TLB flushes, Xen reserves the top 64 megabytes of each address space for itself. This way, no TLB flush is needed every time the hypervisor is entered. In addition, the guest operating system is itself responsible for allocating and managing its page tables. Each time the guest needs a new page table, it first allocates and initializes it in its own memory. The page table is then passed to Xen, which validates it and inserts it at the desired position in the address space. Once a page table has been handed to Xen, the guest operating system can no longer write to it; it only has read access. All subsequent changes to the table have to be requested from the hypervisor by means of special hypercalls, and the hypervisor then validates and applies them. As a result, large portions of an operating system's memory management code generally have to be rewritten. For FreeBSD, for example, the new code required is about as complex as the original.

These restrictions are needed to keep the domains running at the same time isolated from each other. If a domain could write to arbitrary memory addresses, it could modify the memory of other domains and isolation would be lost.
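The update protocol and the isolation check behind it can be shown with a toy model: the guest never writes its page table directly, but queues (entry, new frame) requests and hands the whole batch to the "hypervisor", which checks each request before applying it. This is loosely modeled on Xen's `mmu_update` hypercall, which also takes an array of update requests, but the types, the validation rule (a domain may only map frames it owns) and all names below are simplified, hypothetical assumptions rather than Xen's interface.

```c
/* Toy model of validated page-table updates; names and rules are hypothetical. */
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PT_ENTRIES 8
#define MAX_FRAMES 32

/* One requested change: which entry to set and which machine frame it maps. */
struct pt_update {
    size_t index;
    uint64_t frame;
};

/* State owned by the toy hypervisor; the guest may read it but never writes it. */
struct toy_domain {
    uint64_t page_table[PT_ENTRIES];
    bool owns_frame[MAX_FRAMES];
};

/* Hypervisor side: validate and apply requests in order, stopping at the
 * first invalid one. Returns how many requests were applied. */
static size_t toy_mmu_update(struct toy_domain *d,
                             const struct pt_update *reqs, size_t count)
{
    assert(d != NULL && (reqs != NULL || count == 0));
    size_t done = 0;
    for (; done < count; done++) {
        const struct pt_update *r = &reqs[done];
        bool valid = r->index < PT_ENTRIES &&
                     r->frame < MAX_FRAMES &&
                     d->owns_frame[r->frame];
        if (!valid)
            break; /* mapping a frame the domain does not own would break isolation */
        d->page_table[r->index] = r->frame;
    }
    return done;
}
```

Passing a whole batch per call is a deliberate choice in this sketch: every hypercall is a privilege transition, so applying several updates at once amortizes that cost.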

CPU

The virtualization of the CPU also affects the guest operating system. An x86 CPU provides four privilege levels, also known as rings. Since the hypervisor is the most privileged software running on the physical machine, it runs in ring 0, which usually hosts the OS. The guest operating system, on the other hand, runs in ring 1 on a 32 bit CPU and, on a 64 bit CPU, in ring 3, where userspace code normally runs. This means that it can no longer execute privileged instructions itself. Such instructions, for example loading a new page table directory or halting the CPU, must instead be executed by the hypervisor. Interrupts and exceptions are also paravirtualized: the guest passes a table containing its handlers to Xen, and when an exception or interrupt occurs, Xen calls the responsible handler in the guest OS directly.
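Registering handlers with the hypervisor instead of loading an interrupt descriptor table can be sketched as follows. Xen's real interface is the `set_trap_table` hypercall, which takes an array of `trap_info` entries; the structure below keeps only a vector number and a handler function, and `toy_deliver` stands in for the hypervisor's delivery path. All `toy_` names are hypothetical simplifications.

```c
/* Toy model of a paravirtualized trap table; all toy_ names are hypothetical. */
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef void (*trap_handler)(uint8_t vector);

/* Simplified stand-in for one trap table entry. */
struct toy_trap_info {
    uint8_t vector;
    trap_handler handler;
};

static const struct toy_trap_info *registered_table;

/* "Hypercall": the guest hands its table to the hypervisor. */
static void toy_set_trap_table(const struct toy_trap_info *table)
{
    registered_table = table;
}

/* Hypervisor side: on a trap, find the guest's handler and call it.
 * Returns 0 on success, -1 if no handler is registered for the vector. */
static int toy_deliver(uint8_t vector)
{
    assert(registered_table != NULL);
    for (const struct toy_trap_info *t = registered_table; t->handler != NULL; t++) {
        if (t->vector == vector) {
            t->handler(vector);
            return 0;
        }
    }
    return -1;
}

/* Guest side: example handlers and a table terminated by a NULL handler. */
static int page_faults, divide_errors;
static void on_divide_error(uint8_t v) { (void)v; divide_errors++; }
static void on_page_fault(uint8_t v) { (void)v; page_faults++; }

static const struct toy_trap_info guest_traps[] = {
    { 0, on_divide_error }, /* #DE */
    { 14, on_page_fault },  /* #PF */
    { 0, NULL },            /* end of table */
};
```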

Device I/O

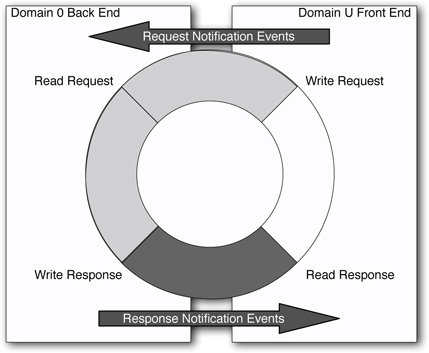

In contrast to full virtualization, where the guest operating system's hardware is completely emulated, Xen only provides the paravirtualized guest with a set of device abstractions. With these, I/O data is transferred to and from the guest via shared memory pages. These pages hold so-called asynchronous ring buffers, which can be used by the guest and the hypervisor at the same time. A ring buffer can be thought of as a circular queue. Access to each ring is managed by two pairs of producer and consumer pointers. When the guest writes data into the ring, it advances its producer pointer to the end of the written data. When the hypervisor reads the data from the ring, it advances its consumer pointer to the end of the data it has read. For data passed from the hypervisor to the guest, the process works the other way round.
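The producer/consumer scheme can be illustrated with a small single-threaded model of one direction of a shared ring (the guest produces requests, the other side consumes them). As in Xen's rings, the indices run freely and are masked with the ring size, which must be a power of two, so that a full ring and an empty ring can be told apart. The names and sizes below are hypothetical; Xen's own macros live in its public `io/ring.h` header, and a real implementation additionally needs memory barriers and event-channel notifications, which this sketch omits.

```c
/* Toy model of one direction of a shared ring; names and sizes are hypothetical. */
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define RING_SIZE 8u
_Static_assert((RING_SIZE & (RING_SIZE - 1)) == 0, "RING_SIZE must be a power of two");
#define RING_MASK(idx) ((idx) & (RING_SIZE - 1))

struct request { uint32_t id; uint32_t sector; };

/* Memory shared between both sides. Indices are free-running counters. */
struct shared_ring {
    uint32_t prod;                 /* written only by the producer */
    uint32_t cons;                 /* written only by the consumer */
    struct request slots[RING_SIZE];
};

/* Unsigned subtraction stays correct even after the counters wrap around. */
static bool ring_full(const struct shared_ring *r)  { return r->prod - r->cons == RING_SIZE; }
static bool ring_empty(const struct shared_ring *r) { return r->prod == r->cons; }

/* Producer: write the data first, then advance the producer index past it. */
static bool ring_put(struct shared_ring *r, struct request req)
{
    assert(r->prod - r->cons <= RING_SIZE); /* indices never drift further apart */
    if (ring_full(r))
        return false;
    r->slots[RING_MASK(r->prod)] = req;
    r->prod++; /* real code needs a write barrier before publishing */
    return true;
}

/* Consumer: read the data, then advance the consumer index past it. */
static bool ring_get(struct shared_ring *r, struct request *out)
{
    if (ring_empty(r))
        return false;
    *out = r->slots[RING_MASK(r->cons)];
    r->cons++;
    return true;
}
```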

With the help of these simple ring buffers, a number of devices can be supported very efficiently, for example network cards and hard disks. The virtual console, with which a guest can forward terminal output to the control domain, is implemented in the same way.

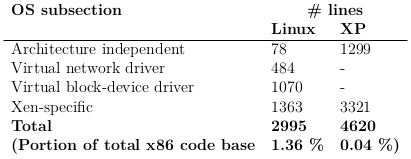

In addition to these major changes, some minor features have to be implemented as well. These include, for example, the ability to translate between the machine addresses of the physical machine running Xen and the pseudo-physical addresses of the domain. Also, the lack of basic hardware such as a programmable interval timer (PIT) makes it necessary to modify, among other things, the algorithms used for timekeeping. In their original paper on Xen, Barham et al. estimated the cost of porting an operating system to the initial release of Xen by counting the added lines of code.
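Without a PIT, a PV guest typically derives time from data the hypervisor publishes in shared memory: a system time snapshot, the TSC value at that snapshot and a scale factor that converts TSC ticks into nanoseconds. The sketch below shows that conversion. The field names follow Xen's `vcpu_time_info` structure, but the struct is abbreviated, and the retry loop on the version counter (an odd value means the hypervisor is in the middle of an update) is simplified and omits the memory barriers real code needs.

```c
/* Simplified TSC-based clock; struct is an abbreviated, hypothetical view. */
#include <assert.h>
#include <stdint.h>

/* Abbreviated view of the per-vCPU time data published by the hypervisor. */
struct time_info {
    uint32_t version;           /* odd while the hypervisor is updating */
    uint64_t tsc_timestamp;     /* TSC value when system_time was sampled */
    uint64_t system_time;       /* nanoseconds at tsc_timestamp */
    uint32_t tsc_to_system_mul; /* 32.32 fixed-point multiplier */
    int8_t tsc_shift;           /* pre-shift applied to the TSC delta */
};

/* Convert a TSC delta into nanoseconds: ((delta << shift) * mul) >> 32. */
static uint64_t scale_delta(uint64_t delta, uint32_t mul, int8_t shift)
{
    assert(shift > -64 && shift < 64);
    if (shift < 0)
        delta >>= -shift;
    else
        delta <<= shift;
    /* 64x32-bit multiply split into halves so no intermediate overflows. */
    uint64_t lo = ((delta & 0xffffffffu) * mul) >> 32;
    uint64_t hi = (delta >> 32) * mul;
    return hi + lo;
}

/* Current system time for a given TSC reading; retries while an update is in flight. */
static uint64_t system_time_ns(const volatile struct time_info *t, uint64_t tsc)
{
    uint32_t version;
    uint64_t ns;
    do {
        version = t->version;
        ns = t->system_time +
             scale_delta(tsc - t->tsc_timestamp, t->tsc_to_system_mul, t->tsc_shift);
        /* real code needs read barriers around this block */
    } while ((version & 1) || version != t->version);
    return ns;
}
```

For example, a TSC running at 2 GHz corresponds to a multiplier of `0x80000000` (0.5 ns per tick in 32.32 fixed point) and a shift of 0.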

The initial port of Linux 2.4 to Xen added about 3000 lines of code, which accounted for about 1.4 % of the total code. However, the significance of this metric is questionable, as it does not provide any insight into the complexity of the required changes.

Fully virtualized guests

The first releases of Xen were not yet capable of running fully virtualized guests, simply because processors at that time could not virtualize x86 in hardware. This changed in 2005 and 2006, when Intel and AMD released their instruction set extensions VT-x and AMD-V, which are intended specifically for virtualization. Both are very similar in concept. They add a new, more privileged execution mode, often described as ring -1, in which a hypervisor can run, so that the guest operating system can run normally in ring 0. Another important feature, introduced a little later, was nested page tables (NPT), which allow a guest to load its own page table directory into register cr3. The guest only sees the address space the hypervisor has assigned to it. The hypervisor in turn controls the second level of translation, from the guest's addresses to machine addresses, but does not have to intervene when the guest installs new page tables. This is complemented by a so-called tagged translation lookaside buffer (TTLB), in which each TLB entry also records which virtual machine it belongs to. Context switches between virtual machines can therefore take place without flushing the TLB.

In Xen, fully virtualized guests are also called hardware virtual machines (HVM). To support unmodified operating systems, an HVM guest boots exactly the way a real machine does: it starts in 16 bit real mode with paging disabled, and the guest operating system is responsible for switching to 64 bit mode and enabling paging itself.

To provide an HVM guest with compatible devices, Xen uses QEMU to emulate a complete computer, including a BIOS, a PIT, a PCI bus and even graphics hardware. It is also possible to give an HVM guest pass-through access to real devices of the underlying host.

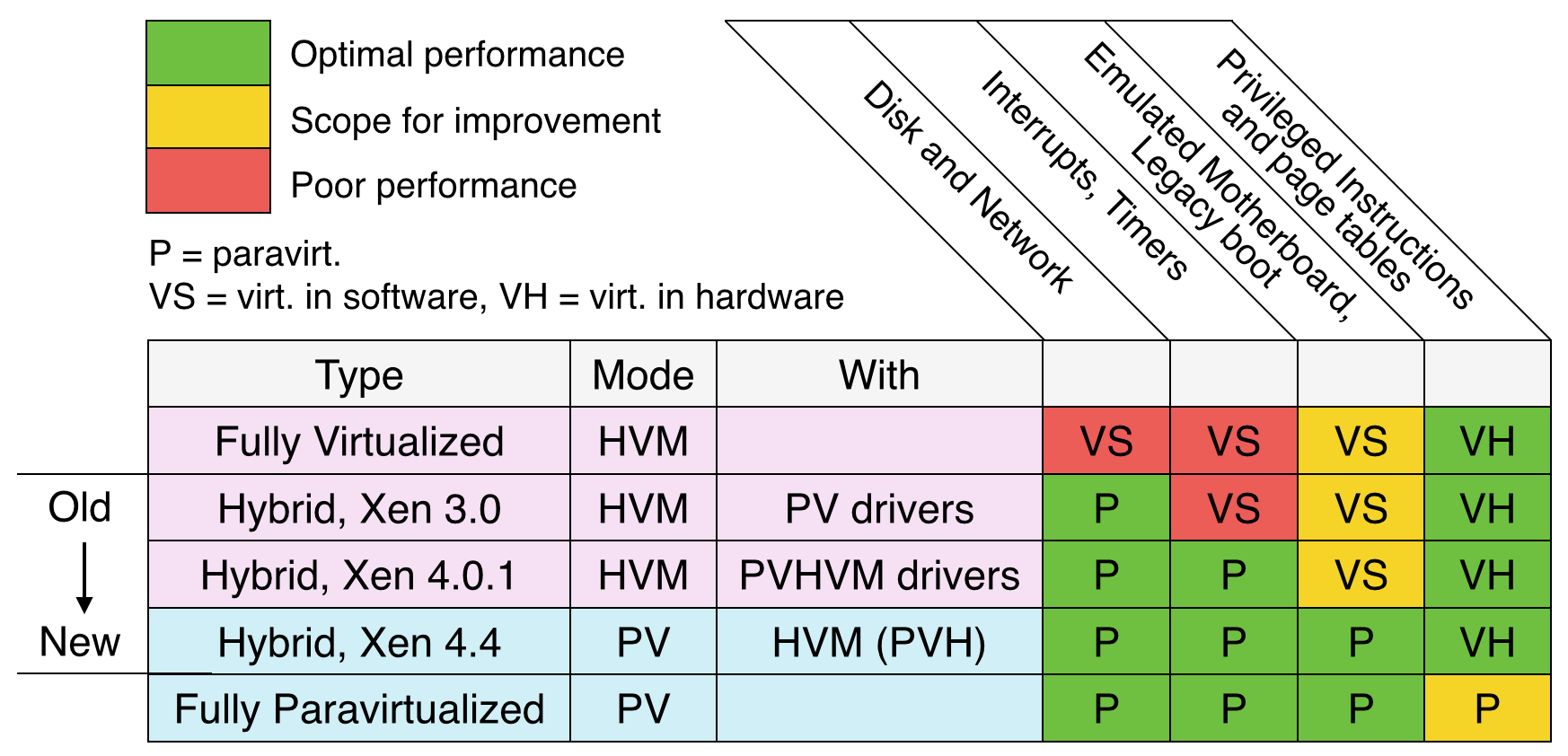

Virtualization Spectrum

Xen provides two main modes of operation, the execution of paravirtualized and of fully virtualized guests, which can be viewed as two opposite poles of a virtualization spectrum. Over time it has become possible to mix the two step by step, so that today one can speak of up to five different types of guests that can be executed in Xen. Each step was taken to get closer to an optimal mode in terms of VM performance.

HVM with PV drivers

The device drivers for emulated network cards and hard disks are very complex compared to those for paravirtualized devices. As a first step, dedicated drivers for paravirtualized devices can be used even in an unmodified operating system such as Windows. This increases the I/O performance of HVM guests considerably.

PVHVM mode

Even with paravirtualized drivers, some aspects of a fully virtualized guest are still very inefficient. Emulated interrupt controllers, for example, require the hypervisor to intervene on every interaction. However, the paravirtualized interfaces of a PV guest are also available to an HVM guest; the operating system only has to “switch them on”. The guest still uses an emulated BIOS and starts in 16 bit mode.
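Before an HVM guest can switch on the paravirtualized interfaces, it has to find out that it is running on Xen at all. On x86 this is commonly done via the hypervisor CPUID leaves starting at `0x40000000`, where Xen reports the signature "XenVMMXenVMM" in EBX, ECX and EDX. The sketch separates the signature check from the CPUID instruction so that it can be tested anywhere; the function names are hypothetical, and a complete implementation would also scan higher bases (e.g. `0x40000100`), since Xen may move its leaves there when it exposes another hypervisor interface as well.

```c
/* Hypothetical helpers for detecting Xen from an HVM guest. */
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Returns true if the three CPUID result registers spell "XenVMMXenVMM". */
static bool is_xen_signature(uint32_t ebx, uint32_t ecx, uint32_t edx)
{
    char sig[13];
    memcpy(sig + 0, &ebx, 4); /* x86 is little endian: bytes come out in order */
    memcpy(sig + 4, &ecx, 4);
    memcpy(sig + 8, &edx, 4);
    sig[12] = '\0';
    return strcmp(sig, "XenVMMXenVMM") == 0;
}

#if defined(__x86_64__) || defined(__i386__)
#include <cpuid.h>
/* Query the first hypervisor leaf on the running CPU (GCC/Clang cpuid.h). */
static bool running_on_xen(void)
{
    unsigned int eax, ebx, ecx, edx;
    __cpuid(0x40000000, eax, ebx, ecx, edx);
    (void)eax; /* EAX holds the highest hypervisor leaf, unused here */
    return is_xen_signature(ebx, ecx, edx);
}
#endif
```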

PVH

The previous two modes add features of a PV guest to an HVM guest. PVH, on the other hand, takes a PV guest as its base and extends it with parts of an HVM guest. A PVH guest is started in a similar way to a PV guest and has no access to emulated hardware. However, it is not started directly in 64 bit mode: it starts in 32 bit protected mode with paging disabled. In contrast to a PV guest, a PVH guest runs in ring 0, just like a fully virtualized guest. This gives it direct control over its page tables, which has several advantages. The biggest one is that the existing memory management code does not have to be rewritten but can be used without modification.
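When a PVH guest is started, its 32-bit entry point receives the physical address of a start-of-day structure in EBX, and the entry code usually verifies the magic value in that structure first. The sketch below performs that check in C on an abbreviated copy of the leading fields of Xen's `struct hvm_start_info` (magic value `XEN_HVM_START_MAGIC_VALUE`, `0x336ec578`); the `toy_` names are hypothetical, and a real entry stub does this in assembly because no stack exists yet.

```c
/* Hypothetical check of the PVH start info; struct is abbreviated. */
#include <stddef.h>
#include <stdint.h>

#define XEN_HVM_START_MAGIC_VALUE 0x336ec578u

/* Leading fields of Xen's hvm_start_info (abbreviated). */
struct toy_hvm_start_info {
    uint32_t magic;         /* must equal XEN_HVM_START_MAGIC_VALUE */
    uint32_t version;
    uint32_t flags;
    uint32_t nr_modules;
    uint64_t modlist_paddr; /* physical address of the module list */
    uint64_t cmdline_paddr; /* physical address of the command line */
    uint64_t rsdp_paddr;    /* physical address of the ACPI RSDP */
};

/* The boot protocol passes an address in EBX; here it is simply cast to a
 * pointer. Returns NULL if the structure does not carry the expected magic. */
static const struct toy_hvm_start_info *toy_pvh_start_info(uintptr_t ebx)
{
    const struct toy_hvm_start_info *si = (const struct toy_hvm_start_info *)ebx;
    if (si == NULL || si->magic != XEN_HVM_START_MAGIC_VALUE)
        return NULL; /* not started via the PVH boot protocol */
    return si;
}
```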

PVH is a relatively new mode in Xen. In terms of performance, it aims to combine the advantages of HVM and PV, and it is expected to become the de facto standard for virtual machines in Xen.

Unikernels

Unikernels are a new approach to deploying cloud services via applications written in high-level source code. They are single-purpose appliances that are compiled into a standalone kernel that is protected against change when deployed in the cloud.

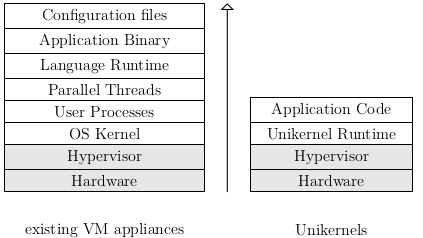

Operating system virtualization with hypervisors such as Xen or VMware is the basic technology of the cloud. It allows users to distribute virtual machines (VMs) across a cluster of physical machines. Each VM represents a virtual computer running a common operating system such as Linux or Windows, which executes unmodified applications as if it were a real physical machine. While virtualization is a very useful tool, it adds yet another layer to an already very complicated software stack that contains many parts, some of which are no longer even useful. For example, to maintain backward compatibility, modern operating systems include drivers for old IDE disks, although these are practically no longer in use.

Most VMs today serve a single purpose and rarely perform multiple functions at once. Deploying VMs to the cloud or to privately managed data centers has become such a trivial task that it is customary to create one VM for each application, for example one VM acting as a web server, another hosting a database server and a third providing shared storage. Although these VMs only perform a single function, they still run general-purpose operating systems designed for a wide variety of tasks. As a result, these machines consume computing resources that are not needed to run the desired application itself, but that the underlying operating system requires for many tasks that are not critical to that application. Since all these extra resources have to be paid for in a cloud environment, this can lead to considerable operational overhead and a waste of computing resources in general.

This is where the unikernel concept comes in. Instead of using a general-purpose operating system for a specific task, each VM is built as a single-purpose appliance from which all unneeded functionality has been removed. Unikernels are specialized operating system kernels that are written in a high-level language and act as individual software components. A complete application consists of a set of running unikernels that work together as a distributed system.

Unikernels are based on the concept of a library operating system (libOS). In a libOS, the mechanisms that provide basic operating system functionality, such as hardware control or network communication, are implemented as a set of libraries. These libraries are compiled together with the application into a single bootable VM image that runs directly on a hypervisor. The resulting images are very compact, often only a few hundred kilobytes in size, which greatly speeds up deployment to data centers over the Internet. Boot times are also usually below one second, which makes it possible to start a unikernel in response to incoming network traffic.
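The core idea of a library operating system can be sketched in a few lines: the "system call" an application issues is an ordinary function call into a library linked into the same image, with no privilege switch in between. Everything below is hypothetical and heavily simplified; the console "driver" just appends to a buffer, where a unikernel running on Xen would, for example, write into the console ring.

```c
/* Toy library-OS image: OS code and application in one binary; names are hypothetical. */
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* --- "library OS" part: a minimal console driver linked into the image --- */
static char console_buf[256];
static size_t console_len;

static size_t libos_console_write(const char *data, size_t len)
{
    size_t room = sizeof(console_buf) - console_len;
    size_t n = len < room ? len : room; /* truncate instead of overflowing */
    if (n > 0)
        memcpy(console_buf + console_len, data, n);
    console_len += n;
    return n;
}

/* write(2)-like entry point: a plain function call, no trap into a kernel. */
static long libos_write(int fd, const void *buf, size_t count)
{
    assert(buf != NULL || count == 0);
    if (fd != 1 && fd != 2)
        return -1; /* only stdout/stderr exist in this toy image */
    return (long)libos_console_write(buf, count);
}

/* --- application part: compiled into the same binary --- */
static void app_main(void)
{
    const char msg[] = "hello from a unikernel\n";
    libos_write(1, msg, sizeof(msg) - 1);
}
```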

Besides their smaller size, much faster boot times and lower resource requirements, unikernels also offer a higher level of security than standard operating systems. Since code that is not part of the unikernel at compile time is never run, code injection attacks become much harder.

HermitCore

HermitCore is a unikernel operating system designed for high performance computing (HPC) and cloud environments. It is being developed at the Institute for Automation of Complex Power Systems at RWTH Aachen University and extends the multi-kernel concept with a unikernel in order to improve the programmability and scalability of HPC systems. HermitCore is an extremely small 64 bit kernel with basic operating system functionality such as memory management and priority-based scheduling. It is designed for Intel's 64 bit processors and supports their extended instruction set for multiprocessing.

HPC systems typically consist of a large number of CPU cores. In the multi-kernel approach, a standard operating system such as Linux runs on a small subset of the available cores to provide basic services such as a filesystem. The remaining cores run a specialized lightweight kernel (LWK) designed from scratch. Such kernels typically implement the POSIX API to simplify software development and support only a limited number of hardware devices. System calls have to be delegated to the Linux kernel to allow the execution of traditional software.

HermitCore combines this multi-kernel approach with unikernels. In contrast to other approaches, most system calls are handled by the unikernel itself and only basic functionality has to be delegated to Linux. This makes POSIX compliance unnecessary for HermitCore, so it can focus on supporting other typical HPC programming models such as message passing. In addition to running as a multi-kernel alongside Linux, HermitCore can also be used as a classical standalone unikernel in a single VM or on real hardware.

Building applications for HermitCore is simplified by a cross toolchain. The popular GNU binutils and the GNU Compiler Collection (gcc) were modified to support HermitCore as a target, so applications for HermitCore can be written in all languages supported by gcc. This includes C, C++, Fortran and even the Go runtime. Thanks to this toolchain, various existing applications can be ported to HermitCore without much effort. They are compiled into a single standalone Executable and Linkable Format (ELF) binary, which can be started in a virtual machine or even on real hardware.

The goal of the implementation in my thesis was to make a standalone HermitCore unikernel run as a virtual machine on the Xen hypervisor.

Conclusion

That’s it for the more theoretical part of my thesis. I explained in some detail what Xen, unikernels and HermitCore are, and described the conceptual changes that have to be made to an operating system so that it can work as a paravirtualized guest in Xen. As you will see in the following posts, running an OS fully virtualized in Xen is rather easy, but paravirtualization requires a lot of changes.

I hope you found this post interesting and will be back for the next part, where I will show what has to be done to make HermitCore run as an HVM guest in Xen.

So long, Jan :-)

© 2024 JanMa's Blog ― Powered by Jekyll and hosted on GitLab