Porting a unikernel to Xen: PVH guest

Welcome back to the fourth part of my blog post series. Last time I wrote about my process of trying to get HermitCore working as a fully paravirtualized PV guest in Xen. Unfortunately, this didn’t work out for a number of reasons, the main one being that I would have had to rewrite the memory management code of HermitCore. So I decided to try to get it working as a PVH guest instead. This worked out quite nicely, as you will read in this post. Once again, all source code discussed here can be found in a separate branch on GitLab.

Table of contents

- Booting

- Initializing Xen features

- Creating multiboot information

- Console output

- Completing startup

- Conclusion

The third guest type I discussed in my thesis is called a PVH guest. It can be viewed as a kind of hybrid between an HVM and a PV guest.

They work almost exactly like PV guests, with one major exception: a PVH guest runs in ring 0 and has direct control over its page tables. This has several advantages. One of the biggest efforts when porting an operating system to Xen is the page table management code. As mentioned in the previous post, a lot of effort would have been needed to modify the existing page table and memory management code to work with the restrictions placed on a PV guest by Xen. In contrast, a PVH guest needs no special page table code. It does need some modifications to work correctly with the Xen hypervisor, but far fewer than a PV guest.

Booting

When starting a PVH guest, Xen defines the following register state:

- ebx: contains the physical memory address where the loader has placed the boot start info structure.

- cr0: bit 0 (PE) must be set. All the other writable bits are cleared.

- cr4: all bits are cleared.

- cs: must be a 32 bit read/execute code segment with a base of ‘0’ and a limit of ‘0xFFFFFFFF’. The selector value is unspecified.

- ds, es: must be a 32 bit read/write data segment with a base of ‘0’ and a limit of ‘0xFFFFFFFF’. The selector values are all unspecified.

- tr: must be a 32 bit TSS (active) with a base of ‘0’ and a limit of ‘0x67’.

- eflags: bit 17 (VM) must be cleared. Bit 9 (IF) must be cleared. Bit 8 (TF) must be cleared. Other bits are all unspecified.

All other processor registers and flag bits are unspecified. The OS is in charge of setting up its own stack, GDT and IDT. Xen starts the PVH guest in 32 bit protected mode with paging disabled (only the PE bit is set in cr0), so the guest has to provide a 32 bit entry point to Xen with the help of an ELF note. The domain builder will jump directly to the specified address in the boot code.

ELFNOTE "Xen",XEN_ELFNOTE_PHYS32_ENTRY,start
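The entry state listed above can also be written down as a small check. This is only a sketch for illustration: the helper name pvh_entry_state_ok is hypothetical and not part of HermitCore or Xen, and it only tests the bits that the list explicitly mentions.

```c
#include <stdbool.h>
#include <stdint.h>

#define CR0_PE    (1u << 0)   /* protected mode enable */
#define CR0_PG    (1u << 31)  /* paging */
#define EFLAGS_TF (1u << 8)   /* trap flag */
#define EFLAGS_IF (1u << 9)   /* interrupt enable flag */
#define EFLAGS_VM (1u << 17)  /* virtual-8086 mode */

/* Hypothetical helper: returns true if the given register values
 * match the PVH entry state described in the list above. */
bool pvh_entry_state_ok(uint32_t cr0, uint32_t cr4, uint32_t eflags)
{
    /* protected mode must be on, paging must be off */
    if (!(cr0 & CR0_PE) || (cr0 & CR0_PG))
        return false;
    /* all cr4 bits are cleared */
    if (cr4 != 0)
        return false;
    /* VM, IF and TF must be cleared, other flag bits are unspecified */
    return (eflags & (EFLAGS_VM | EFLAGS_IF | EFLAGS_TF)) == 0;
}
```

A guest would not normally need such a check, but it makes clear which bits the boot code may rely on: interrupts are off and paging still has to be set up by the guest itself.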

When HermitCore is not booting directly into 64 bit mode, it first has to run the included loader. Therefore all changes in the boot code have to be implemented in the entry.asm file of the loader.

Instead of the multiboot information, Xen passes the address of the start info struct to the guest in the ebx register, so this address has to be saved. Apart from that, only the ELF note mentioned above has to be added. The resulting changes in entry.asm are therefore minimal.

SECTION .mboot

global start

start:

cli ; avoid any interrupt

jmp stublet

...

SECTION .text

ALIGN 4

stublet:

; Initialize stack pointer

mov esp, boot_stack

add esp, KERNEL_STACK_SIZE - 16

; Save Xen start info

mov DWORD [xen_start_info], ebx

...

; jump to the boot processor's C code

extern main

jmp main

jmp $

align 4096

global shared_info, hypercall_page

shared_info:

times 512 DQ 0

hypercall_page:

times 512 DQ 0

SECTION .data

global mb_info, xen_start_info

ALIGN 8

mb_info:

DQ 0

xen_start_info:

DQ 0

A special hypercall_page and shared_info page have been added again as described in the previous post. Similar to a PV guest, the first thing a PVH guest has to set up are hypercalls and the shared_info page.

Initializing Xen specific features

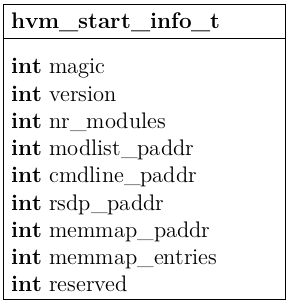

Setting these up works a little differently than in a purely paravirtualized guest. Hypercalls have to be specifically enabled and mapping the shared_info page requires a different hypercall. In addition, a hvm_start_info struct is passed to the guest instead of the start_info_t struct described previously.

The values in this struct are almost completely different to the values of the start_info_t struct but are nevertheless very important for the following steps in the boot process.

Hypercalls

As opposed to a PV guest, a PVH guest has to enable hypercalls before it can use them. The following code has been taken from Mini-OS.

#define XEN_CPUID_FIRST_LEAF 0x40000000

/*

* Leaf 1 (0x40000x00)

* EAX: Largest Xen-information leaf. All leaves up to an including @EAX

* are supported by the Xen host.

* EBX-EDX: "XenVMMXenVMM" signature, allowing positive identification

* of a Xen host.

*/

#define XEN_CPUID_SIGNATURE_EBX 0x566e6558 /* "XenV" */

#define XEN_CPUID_SIGNATURE_ECX 0x65584d4d /* "MMXe" */

#define XEN_CPUID_SIGNATURE_EDX 0x4d4d566e /* "nVMM" */

static inline void wrmsrl(unsigned msr, uint64_t val)

{

wrmsr(msr, (uint32_t)(val & 0xffffffffULL), (uint32_t)(val >> 32));

}

static void hpc_init(void)

{

uint32_t eax, ebx, ecx, edx, base;

for ( base = XEN_CPUID_FIRST_LEAF;

base < XEN_CPUID_FIRST_LEAF + 0x10000; base += 0x100 )

{

cpuid(base, &eax, &ebx, &ecx, &edx);

if ( (ebx == XEN_CPUID_SIGNATURE_EBX) &&

(ecx == XEN_CPUID_SIGNATURE_ECX) &&

(edx == XEN_CPUID_SIGNATURE_EDX) &&

((eax - base) >= 2) )

break;

}

cpuid(base + 2, &eax, &ebx, &ecx, &edx);

wrmsrl(ebx, (unsigned long)&hypercall_page);

barrier();

}

To enable hypercalls, the guest first has to issue cpuid instructions until the registers ebx, ecx and edx contain the XEN_CPUID_SIGNATURE values defined in the above listing. A further cpuid call on the leaf at base + 2 returns the index of the hypercall MSR in ebx. The guest then issues a wrmsr instruction to tell the hypervisor the address of its desired hypercall_page.
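The listing above does not handle the case where no Xen signature is found. The following sketch shows the same scan with an explicit "not found" result. To make it runnable outside of a guest, the cpuid instruction is passed in as a function pointer. The names xen_find_cpuid_base, cpuid_fn, fake_cpuid and fake_xen_base are made up for this example and do not come from Mini-OS or HermitCore.

```c
#include <stdint.h>

#define XEN_CPUID_FIRST_LEAF    0x40000000u
#define XEN_CPUID_SIGNATURE_EBX 0x566e6558u /* "XenV" */
#define XEN_CPUID_SIGNATURE_ECX 0x65584d4du /* "MMXe" */
#define XEN_CPUID_SIGNATURE_EDX 0x4d4d566eu /* "nVMM" */

/* Executes cpuid for one leaf. Passing this in as a parameter lets
 * the scan run without real hardware (assumption of this sketch). */
typedef void (*cpuid_fn)(uint32_t leaf, uint32_t *eax, uint32_t *ebx,
                         uint32_t *ecx, uint32_t *edx);

/* Returns the base leaf at which Xen announces itself, or 0 if the
 * signature was not found in the scanned range. */
uint32_t xen_find_cpuid_base(cpuid_fn cpuid)
{
    for (uint32_t base = XEN_CPUID_FIRST_LEAF;
         base < XEN_CPUID_FIRST_LEAF + 0x10000u; base += 0x100u) {
        uint32_t eax, ebx, ecx, edx;

        cpuid(base, &eax, &ebx, &ecx, &edx);
        if (ebx == XEN_CPUID_SIGNATURE_EBX &&
            ecx == XEN_CPUID_SIGNATURE_ECX &&
            edx == XEN_CPUID_SIGNATURE_EDX &&
            (eax - base) >= 2)
            return base;
    }
    return 0;
}

/* Stand-in for the real instruction: pretends that Xen sits at the
 * leaf stored in fake_xen_base. */
static uint32_t fake_xen_base = 0x40000100u;

void fake_cpuid(uint32_t leaf, uint32_t *eax, uint32_t *ebx,
                uint32_t *ecx, uint32_t *edx)
{
    *eax = *ebx = *ecx = *edx = 0;
    if (leaf == fake_xen_base) {
        *eax = leaf + 2; /* largest supported leaf */
        *ebx = XEN_CPUID_SIGNATURE_EBX;
        *ecx = XEN_CPUID_SIGNATURE_ECX;
        *edx = XEN_CPUID_SIGNATURE_EDX;
    }
}
```

With a check on the return value, the boot code could stop early instead of writing to an MSR index that was read from an unrelated leaf.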

Shared info page

A PVH guest doesn’t have access to the same hypercalls a PV guest has. To map the shared_info page the HYPERVISOR_memory_op hypercall has to be used. It sets the page frame number (PFN) at which a specific page should appear in the guest’s address space.

shared_info_t *map_shared_info(void *p)

{

struct xen_add_to_physmap xatp;

xatp.domid = DOMID_SELF;

xatp.idx = 0;

xatp.space = XENMAPSPACE_shared_info;

xatp.gpfn = PFN_DOWN((size_t)&shared_info);

if ( HYPERVISOR_memory_op(XENMEM_add_to_physmap, &xatp) != 0 )

asm volatile ("hlt");

return &shared_info;

}

The hypercall takes an operation and a pointer to a xen_add_to_physmap struct as arguments. Both are defined in the memory.h header file.

Creating a multiboot information struct

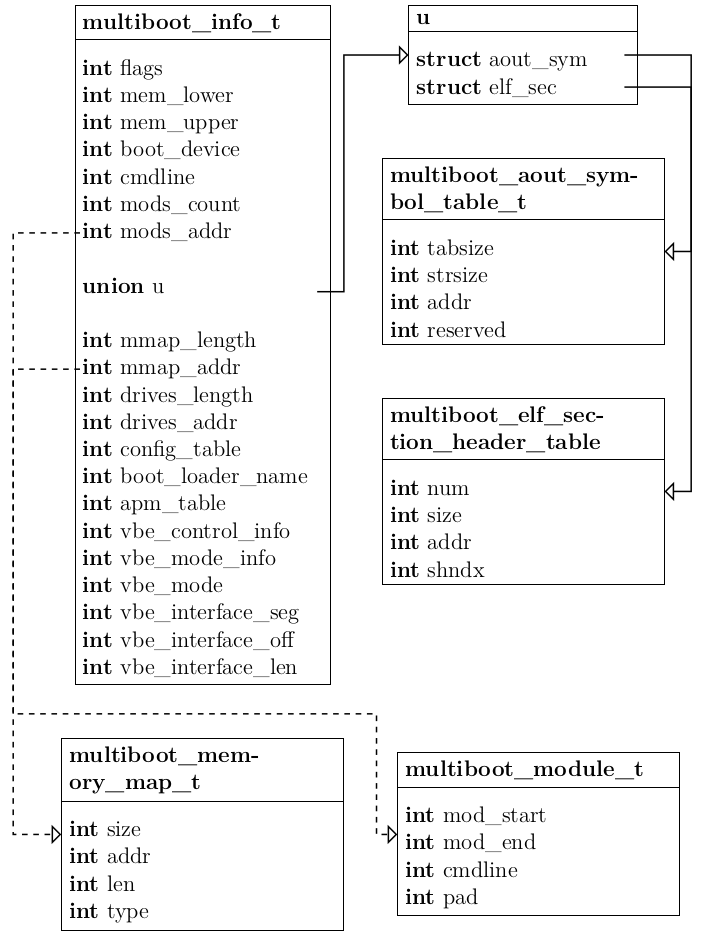

As mentioned previously, HermitCore always expects a multiboot_info_t struct in the ebx register when booting. It contains a lot of important information about the environment HermitCore is running in and is needed in the whole startup process. Considering that Xen does not pass such a struct to the guest on startup, there are two possible ways to obtain the needed information.

One would be to rewrite the parts of the code that rely on the multiboot_info_t struct to use information provided by Xen instead. This would have to be done for the loader and the kernel and would require a lot of changes. The second way is to simply gather all the information needed once at startup and create a multiboot_info_t struct, which can then be passed to the code that needs it. In view of its simpler implementation, I chose the second method and implemented a build_multiboot() function in the loader’s main.c file.

The multiboot_info_t struct is a rather large and complex structure which consists of many variables and pointers to other structures. Its structure can be seen above. Fortunately, HermitCore does not need all of the information which could be included in this struct. The build_multiboot function only has to create a multiboot_memory_map_t struct, a multiboot_module_t struct and set the correct flags. In addition to that, the function also calculates the CPU frequency with the help of the shared_info page.

Flags

The following flags need to be set for HermitCore to work properly:

- MULTIBOOT_INFO_MEMORY: Information about the available memory is provided.

- MULTIBOOT_INFO_CMDLINE: Extra command line options are defined.

- MULTIBOOT_INFO_MODS: There are modules passed to the operating system.

- MULTIBOOT_INFO_MEM_MAP: A full memory map is provided.

To set these flags, bits 0, 2, 3 and 6 (counting from zero) have to be set to one in the flags variable of the multiboot_info_t struct:

mb_tmp.flags = 0x00000001 | 0x00000004 | 0x00000008 | 0x00000040;

No additional command line options have to be passed to the kernel, so the cmdline variable can be set to zero.
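For readability, the same value can be built from named constants. The values below are the ones the Multiboot specification defines in multiboot.h. The function name hermit_multiboot_flags is only chosen for this sketch.

```c
#include <stdint.h>

/* Flag values as defined by the Multiboot specification */
#define MULTIBOOT_INFO_MEMORY  0x00000001u /* bit 0: mem_lower/mem_upper valid */
#define MULTIBOOT_INFO_CMDLINE 0x00000004u /* bit 2: cmdline valid */
#define MULTIBOOT_INFO_MODS    0x00000008u /* bit 3: mods_count/mods_addr valid */
#define MULTIBOOT_INFO_MEM_MAP 0x00000040u /* bit 6: mmap_length/mmap_addr valid */

/* Hypothetical helper returning the flags word described above */
uint32_t hermit_multiboot_flags(void)
{
    return MULTIBOOT_INFO_MEMORY | MULTIBOOT_INFO_CMDLINE |
           MULTIBOOT_INFO_MODS | MULTIBOOT_INFO_MEM_MAP;
}
```

The result is 0x4d, the same value the literal expression above produces.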

Memory map

To create the multiboot_memory_map_t structs, the guest first has to issue the HYPERVISOR_memory_op hypercall to get the memory map from Xen. The hypercall fills a buffer with e820entry structs, which have to be translated into multiboot_memory_map_t structs.

/* PC BIOS standard E820 types and structure. */

#define E820_RAM 1

#define E820_RESERVED 2

#define E820_ACPI 3

#define E820_NVS 4

#define E820_UNUSABLE 5

#define E820_PMEM 7

#define E820_TYPES 8

struct __attribute__((__packed__)) e820entry {

uint64_t addr;

uint64_t size;

uint32_t type;

};

Translating it is done in the following way:

//get memory map from Xen

struct xen_memory_map mmap;

mmap.nr_entries = E820_MAX;

mmap.buffer = e820_map;

int rc = HYPERVISOR_memory_op(XENMEM_memory_map, &mmap);

if (rc){

kprintf("Getting mmap failed!\n");

HALT;

}

kprintf("Memmap nr_entries: %d\n", mmap.nr_entries);

for ( int i = 0; i < mmap.nr_entries ; i++){

kprintf("size: %ld addr: %lx type: %d\n", e820_map[i].size, e820_map[i].addr, e820_map[i].type);

mboot_mmap[i].len = e820_map[i].size;

mboot_mmap[i].addr = e820_map[i].addr;

mboot_mmap[i].type = e820_map[i].type;

mboot_mmap[i].size = sizeof(multiboot_memory_map_t)-sizeof(uint32_t);

}

mb_tmp.mmap_addr = (multiboot_uint32_t)&mboot_mmap;

mb_tmp.mmap_length = mmap.nr_entries * sizeof(multiboot_memory_map_t);

kprintf("mmap: 0x%lx mmap_length: 0x%lx\n", mb_tmp.mmap_addr, mb_tmp.mmap_length);
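The translation step can also be isolated into a pure function, which makes it easy to try out without a hypervisor. This is a sketch under a few assumptions: the function name e820_to_multiboot is made up, and the multiboot_memory_map_t layout is written out here as it appears in the Multiboot specification instead of being taken from HermitCore's headers.

```c
#include <stddef.h>
#include <stdint.h>

struct __attribute__((__packed__)) e820entry {
    uint64_t addr;
    uint64_t size;
    uint32_t type;
};

/* Entry layout as given in the Multiboot specification */
typedef struct __attribute__((__packed__)) {
    uint32_t size; /* size of the entry, not counting this field */
    uint64_t addr;
    uint64_t len;
    uint32_t type;
} multiboot_memory_map_t;

/* Copies n E820 entries into out and returns the total length in
 * bytes, which is the value to store in mmap_length. */
uint32_t e820_to_multiboot(const struct e820entry *in, size_t n,
                           multiboot_memory_map_t *out)
{
    for (size_t i = 0; i < n; i++) {
        out[i].size = sizeof(multiboot_memory_map_t) - sizeof(uint32_t);
        out[i].addr = in[i].addr;
        out[i].len  = in[i].size;
        out[i].type = in[i].type;
    }
    return (uint32_t)(n * sizeof(multiboot_memory_map_t));
}
```

The type field can be copied unchanged because the E820 type numbers 1 to 5 have the same meaning in the multiboot memory map.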

Multiboot module information

When starting HermitCore as a PVH guest, Xen will first load the binary file of the HermitCore loader and pass the actual application that is intended to run as a ram disk. This is very similar to an HVM guest, where the application gets passed as a multiboot module directly. The virtual memory layout of a PVH guest is almost the same as for a PV guest, which is described here. The initial ram disk is located just after the relocated kernel image, which means that the ram disk starts at the beginning of the first page after the HermitCore loader. The correct start address can be determined by using the provided kernel_end variable, which contains the address where the loader ends, and calculating the start address of the next page from there.

The size of the ram disk can be calculated in a similar manner. The magic variable inside the hvm_start_info_t struct contains the address where the struct is located. Subtracting the start address of the ram disk from this address provides the size of the ram disk. This works because a PVH guest is not passed a list of allocated page frames, so the hvm_start_info_t struct is located just behind the initial ram disk.

These two values are all that is needed by the build_multiboot function to create a multiboot_module_t struct.

// calculate the next page frame

uint64_t mod_addr = pfn_to_virt(PFN_UP((uint64_t)&kernel_end));

// calculate the size of the ram disk

uint64_t mod_len = (uint64_t)start_info_ptr->magic - mod_addr;

kprintf("module0 addr: 0x%lx\n", mod_addr);

kprintf("module0 len: 0x%lx\n", mod_len);

mmod[0].mod_start = mod_addr;

mmod[0].mod_end = mod_addr + mod_len;

mmod[0].cmdline = 0;

mmod[0].pad = 0;

mb_tmp.mods_count = 1;

mb_tmp.mods_addr = (multiboot_uint32_t)&mmod;
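The address arithmetic behind these two values can be sketched as a small standalone function. It assumes 4 KiB pages and an identity mapping, so that pfn_to_virt is a plain shift. The names locate_ramdisk and module_range are hypothetical and only used here.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_SIZE  (1ull << PAGE_SHIFT)
/* Rounds an address up to the next page frame number */
#define PFN_UP(x)  (((x) + PAGE_SIZE - 1) >> PAGE_SHIFT)

struct module_range {
    uint64_t start; /* first byte of the ram disk */
    uint64_t end;   /* first byte after the ram disk */
};

/* kernel_end:      address where the loader image ends
 * start_info_addr: address of the start info struct, which is
 *                  expected to lie directly behind the ram disk */
struct module_range locate_ramdisk(uint64_t kernel_end,
                                   uint64_t start_info_addr)
{
    struct module_range r;

    /* assumption: identity mapping, so pfn << PAGE_SHIFT is the address */
    r.start = PFN_UP(kernel_end) << PAGE_SHIFT;
    assert(start_info_addr >= r.start);
    r.end = start_info_addr;
    return r;
}
```

For example, a loader ending at 0x104123 with the start info struct at 0x180000 gives a ram disk that starts at 0x105000 and is 0x7b000 bytes long.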

CPU frequency

The last thing the build_multiboot function has to do is calculate the CPU frequency. Strictly speaking, this is not part of the multiboot_info_t struct, but HermitCore needs it nevertheless. Determining it at this point in the startup process is very convenient, which is why it is included in the build_multiboot function.

The equation to calculate the CPU frequency is very similar to the one used to calculate the current system time in a PV guest.

frequency=((10^9 << 32) ÷ tsc_to_system_mul) >> |tsc_shift|

The values needed can be gathered from the shared_info_t struct.
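In C, the calculation could look roughly like the following sketch. It assumes that tsc_to_system_mul is an unsigned 32 bit value and tsc_shift a signed 8 bit value, as in the time information Xen publishes for each vCPU in the shared_info page. A negative tsc_shift is treated as a shift in the opposite direction, which is my reading of how the field is used and goes beyond the formula above. The function name tsc_freq_hz is made up.

```c
#include <assert.h>
#include <stdint.h>

/* Returns the TSC frequency in Hz from the two scaling values.
 * 10^9 << 32 still fits into 64 bits, so no wider type is needed. */
uint64_t tsc_freq_hz(uint32_t tsc_to_system_mul, int8_t tsc_shift)
{
    assert(tsc_to_system_mul != 0);

    uint64_t hz = (1000000000ull << 32) / tsc_to_system_mul;

    if (tsc_shift < 0)
        hz <<= -tsc_shift; /* ticks are shifted right, so the TSC runs faster */
    else
        hz >>= tsc_shift;
    return hz;
}
```

With tsc_to_system_mul = 0x80000000 and tsc_shift = 0, one TSC tick corresponds to half a nanosecond, so the function returns 2000000000, which is 2 GHz.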

Console output

In theory it should have been possible to make a PVH HermitCore guest use the same virtual console a PV guest uses. Mapping the required shared pages and initializing events to communicate with the control domain works almost the same way. The necessary functions to initialize the console device and write data to it are also included in the xen_pvh branch but are not used. The reason is that when trying to write output to the virtual console, the data gets written correctly into the mapped shared memory page, but the control domain is not notified and therefore displays no output.

Fortunately, Xen provides a second way to display the output of a guest. There is a HYPERVISOR_console_io hypercall which can be used to print data to an emergency debug console. To have access to this debug console, Xen has to be compiled from source with debug support enabled. I will provide a short description of how to do this in a later post.

Writing data to the emergency console is very easy. The HYPERVISOR_console_io hypercall takes three arguments to work.

HYPERVISOR_console_io(CONSOLEIO_write, strlen(buf), buf);

The first argument instructs the hypervisor to write data to the console. The second and third arguments contain the length of the string and the string itself. For more convenient usage, I implemented a printf-like function xprintk in the same way as for a PV guest.

To read the data from the emergency console, the xl dmesg command has to be used inside the control domain. It prints all data written to the emergency console.
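A printf-like wrapper of this kind can be sketched as follows. This is not the xprintk implementation from the branch, only an illustration of the idea. The function console_write is a hypothetical stand-in for the hypercall so that the example runs on any machine, and the sketch assumes that a vsnprintf implementation is available.

```c
#include <stdarg.h>
#include <stdio.h>
#include <string.h>

/* Collects everything that would be sent to the emergency console */
static char console_log[256];

/* Hypothetical backend. Inside the guest this would be the call
 * HYPERVISOR_console_io(CONSOLEIO_write, len, buf) instead. */
static void console_write(int len, const char *buf)
{
    size_t used = strlen(console_log);

    if (used + (size_t)len < sizeof(console_log)) {
        memcpy(console_log + used, buf, (size_t)len);
        console_log[used + (size_t)len] = '\0';
    }
}

/* Formats the message into a local buffer and hands it to the
 * backend. Returns the number of characters written. */
int xprintk_sketch(const char *fmt, ...)
{
    char buf[128];
    va_list ap;
    int n;

    va_start(ap, fmt);
    n = vsnprintf(buf, sizeof(buf), fmt, ap);
    va_end(ap);

    if (n < 0)
        return n;
    if ((size_t)n >= sizeof(buf))
        n = (int)sizeof(buf) - 1; /* output was truncated */

    console_write(n, buf);
    return n;
}
```

Formatting into a fixed buffer first means that a single hypercall is enough per message, at the cost of truncating very long lines.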

Completing startup

With the above features implemented, the HermitCore loader is able to complete its startup process and load the application binary. The kernel code doesn’t need many additional changes. After starting the application binary, hypercalls have to be enabled again to provide the possibility of console output and the shared_info page has to be mapped again. This works exactly the same way as in the loader. In addition to that, the detection of PCI and UART devices and the initialization of the network were disabled.

A PVH guest does not have access to any emulated hardware devices, including PCI and UART devices. Trying to initialize them only wastes time in the boot process, since none can be found. This is also why the network initialization is disabled. At the time of writing it is not possible to use networking when running HermitCore as a PVH guest on Xen. The drivers necessary to make it work need to be ported from Mini-OS or Linux to HermitCore.

Console output from an application

To enable console output from applications to the emergency console, a small trick has been used. Normally, C programs use printf or similar functions to display text output inside a terminal window. When they are compiled into HermitCore, they get linked against newlib, the C library included in HermitCore. Inside this library, the function putchar, which is used by printf and similar functions to write a character to the standard output, is implemented so that each character that would be written to the terminal gets written to the UART device instead. This is done by calling the write_to_uart function which is included in HermitCore. By modifying this function to also write the passed characters to the Xen emergency console, C programs can still use the standard functions to print text.

static inline void write_to_uart(uint32_t off, unsigned char c)

{

while (is_transmit_empty() == 0) { PAUSE; }

if (uartport)

outportb(uartport + off, c);

// also write the output to Xen's emergency console

xprintk("%c", c);

}

Conclusion

A HermitCore PVH guest is working almost completely. Only a few things are missing that have to be implemented in the future before it can be considered fully working. These include:

- a working virtual console

- working networking

- support for multiple CPUs

The performance of a PVH guest is astonishing. It boots as fast as a HermitCore guest running on the KVM hypervisor, even though it is a virtual machine running inside a virtual machine running on Linux. The decision to move the focus of the implementation away from a purely paravirtualized PV guest to a hybrid PVH guest has clearly paid off. I will provide a more detailed performance comparison in the next post. I hope I kept it interesting and that you will be back next time.

Until then, Jan


© 2024 JanMa's Blog ― Powered by Jekyll and hosted on GitLab